The 12 Laws of AI – Law 4: The Law of the Imperfect Mirror

October 24, 2023

The following is the third part in our blog series “The 12 Laws of AI.” The series is a set of practical and philosophical guidelines for DMOs to work from as they explore the opportunities and challenges of artificial intelligence (AI). Check out the second part of this series, “The 12 Laws of AI – Law 2: Humble Beginnings and Law 3: Transparency.”

Welcome back to Madden’s “The 12 Laws of AI” series! Over the past few weeks we’ve discussed what AI is, what it’s not, the importance of starting small (and choosing the right tool for the job), and finally the need for transparency as we integrate these tools into our daily operations and strategic thinking. This 6th wave of innovation, with AI at its forefront, is moving so rapidly that it can feel a bit like we’re building the airplane as we fly it. The concerns and issues, while not insignificant, need to be understood and acknowledged rather than used as reasons to put off adopting the various AI tools available. It is truly a case where the juice is, in fact, worth the squeeze!

Law 4: The Law of the Imperfect Mirror

AI is an attempted reflection of humanity’s own mind, bearing our brilliance and our fallibilities. It may be swayed by prejudice, misinformation, or even invent the truth when in doubt. The wise will approach AI with discernment, skeptical eyes, and a probing spirit. An old Russian proverb said it best: “Trust, but verify.”

AI: More Than Just a Computer on Steroids

A pervasive fear about artificial intelligence is that it will evolve one day to replace humans as the dominant ‘species’ on the planet. While that debate is beyond the scope of this particular blog series, it’s a fear that I don’t think we’ll need to be too preoccupied with inside our lifetimes. On the other hand, misconceptions about what AI is, what it’s not, the complex issues surrounding how it works, and what it gets wrong are certainly topics that any professional using these tools needs a comfortable working knowledge of. People often lump AI in with traditional computing and expect the same reliability, precision, speed, and accuracy, complete with bug warnings. However, AI differs in numerous critical aspects from the conventional computers we’ve grown accustomed to. In short, AI is a lot more like us than it is like your MacBook or Microsoft Word. Let’s delve into some of these distinctions.

Design and Learning Mechanisms

Perhaps the most fundamental difference between AI and traditional computing lies in their designs and learning capabilities. AI platforms, such as ChatGPT and Google’s Bard, are built on artificial neural networks. These networks draw inspiration from the human brain’s architecture, enabling AI to “learn” from data and real-world experience. This learning model allows AI systems to evolve over time and adapt to new situations. We’ve been using AI systems like this in everyday life for a long time now: it’s how Amazon learns which products you might want to buy based on your past purchases, and how Spotify recommends new songs based on what you regularly listen to. This loop of data input and user feedback helps a platform like ChatGPT improve its responses over time, and it can even correct a wrong answer mid-conversation when you point out the mistake.
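For readers who like to see the idea in miniature, here is a rough Python sketch of that kind of feedback loop. It is a toy under stated assumptions, not how Amazon or Spotify actually work: the `ToyRecommender` class and the product names are hypothetical, and the "learning" is nothing more than counting which items show up in the same basket.

```python
from collections import Counter, defaultdict


class ToyRecommender:
    """Hypothetical toy model: suggests items often bought together."""

    def __init__(self):
        # co_counts[a][b] = number of baskets that contained both a and b
        self.co_counts = defaultdict(Counter)

    def learn(self, basket):
        """Update the counts from one observed purchase (the feedback loop)."""
        for item in basket:
            for other in basket:
                if item != other:
                    self.co_counts[item][other] += 1

    def recommend(self, item, k=1):
        """Return up to k items most often bought alongside `item`."""
        return [name for name, _ in self.co_counts[item].most_common(k)]


rec = ToyRecommender()
rec.learn(["tent", "sleeping bag"])
print(rec.recommend("tent"))  # only one pairing has been seen so far

rec.learn(["tent", "camp stove"])
rec.learn(["tent", "camp stove"])
print(rec.recommend("tent"))  # the suggestion shifts as new data arrives
```

The point of the sketch is that nobody reprogrammed anything between the two `print` calls. The suggestion changed only because the data did.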

In contrast to the AI examples above, traditional computers function based on specific, pre-defined programming. For example, Microsoft Word’s grammar suggestions won’t evolve to include current linguistic trends unless there is a software update. It can’t autonomously recognize and understand the use of newly coined words like “yeet” in a sentence (that actually makes computers a little bit more like parents of teenagers, your humble author included, haha).

So, while AI is certainly more capable than traditional computers of learning and gaining new capabilities, remember…just because AI system design is inspired by the gray stuff between your ears doesn’t mean it comes anywhere remotely close to the universal wonder that is the human brain. AI is still limited by our technological ability to create and design these systems, and a popular opinion in tech circles is that AI development may be hitting a plateau for the time being.

Errors and Adaptability

A common analogy describes AI as an eager-to-please puppy. It aims to provide the answer it anticipates you desire. While traditional programs like Microsoft Word can help you spell “cappuccino” correctly, they can’t offer directions to the nearest Starbucks—because that’s beyond their programming scope.

AI systems, despite their susceptibility to errors, possess a unique strength: adaptability. As these platforms accumulate more data and experience, they continually refine their responses. For example, recommendation engines on e-commerce websites evolve to show more accurate product suggestions as they learn from user behavior.

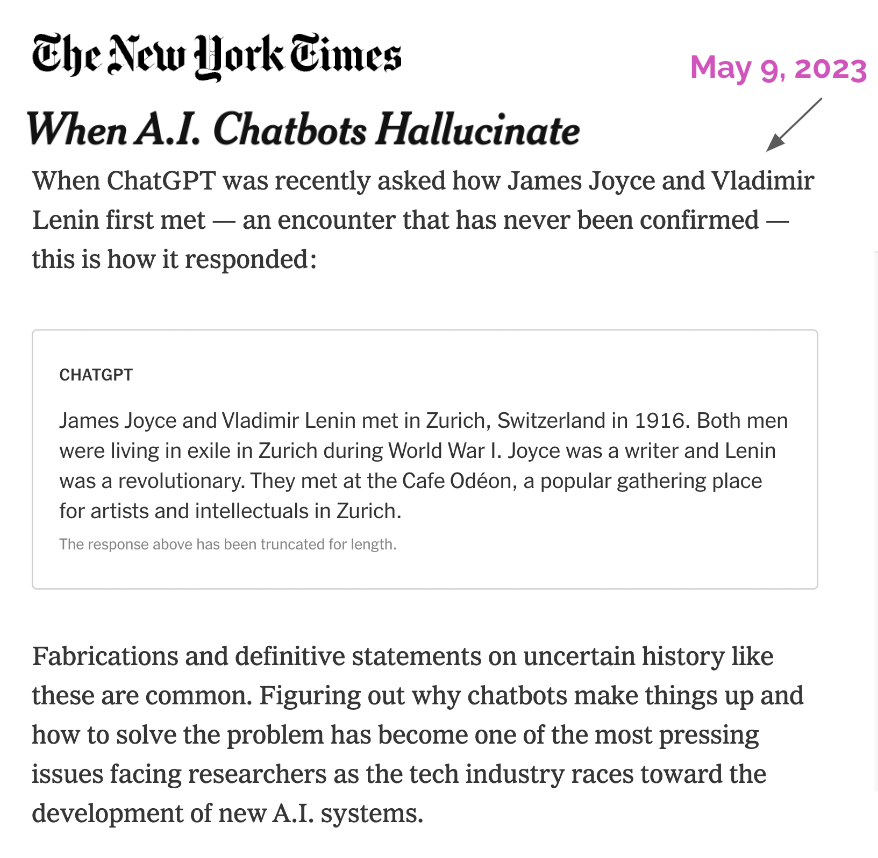

However, it’s important to note a crucial limitation: AI “hallucinations,” which are false facts or incorrect answers presented as if they were true. Platforms like ChatGPT and Bard won’t necessarily signal when they’ve provided a wrong answer. This is a challenge that the AI industry is actively working to mitigate, but it remains a work in progress. You can see the improvement by comparing a May 9, 2023 New York Times article, “When A.I. Chatbots Hallucinate,” with the results of the same test run a few months later.

The New York Times article focused on a question posed to ChatGPT about a meeting between author James Joyce and Vladimir Lenin. ChatGPT provided a full description and details of the meeting…but the meeting never officially happened, according to historical records. ChatGPT essentially made up the facts around this uncertain piece of history.

And yet, a few months later in August, I asked ChatGPT the exact same question. This time, the response was far more nuanced and acknowledged that no official meeting was ever recorded:

Encouragingly, this particular example shows that progress and improvement are happening, while underscoring the need to do your homework if using AI tools to perform research on a topic, to assist in writing content, or help with data analysis. Again…trust, but verify!

AI Bias: Yes, it’s a thing. No, it’s not (exactly) what you think.

It’s important that we start a discussion about AI bias with a frank assessment of what exactly we mean here. If you recall, we covered the fact that AI platforms are not sentient and essentially operate as “prediction machines” that combine user input, training data, and algorithmic models to predict the desired answer to a query. AI is not inherently biased in the way we are accustomed to dealing with bias as a social issue. AI doesn’t judge a user or their query, nor does it have any “personal” opinions on social issues. So where does the acknowledged bias issue come from? It’s complex, but the generally accepted answer in AI and computer science circles is that AI learning models strictly follow the data they’re trained on. In other words, if a model is trained to recognize a dolphin using hundreds of images of giraffes (and is told these are dolphins), it will make the mistake of giving you a picture or description of a giraffe when you ask for a dolphin. This might sound ridiculous, but in a simplistic way it explains why, if you ask what a Fortune 500 CEO “looks like,” the results may be a homogeneous collection of older white males wearing suits.
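The dolphin-and-giraffe scenario can be sketched in a few lines of Python. This is a deliberately tiny illustration, not how image models are really built: the features (neck length, fin count), the numbers, and the `train` and `predict` helpers are all made up for the example, and the "model" simply memorizes its examples and returns the label of the closest one.

```python
def train(examples):
    """'Training' here just memorizes (features, label) pairs."""
    return list(examples)


def predict(model, features):
    """Return the label of the closest memorized example (1-nearest neighbor)."""
    def distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, label = min(model, key=lambda pair: distance(pair[0], features))
    return label


# Invented features for illustration: (neck length in meters, number of fins)
training_data = [
    ((2.0, 0), "dolphin"),  # really a giraffe, but labeled "dolphin"
    ((1.8, 0), "dolphin"),  # really a giraffe, but labeled "dolphin"
    ((0.05, 0), "cat"),     # correctly labeled, included for contrast
]

model = train(training_data)
print(predict(model, (1.9, 0)))  # a giraffe-like input gets called "dolphin"
```

The model has no opinion about giraffes. It faithfully repeats the labels it was given, which is the whole problem.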

Interestingly, when OpenAI’s DALL-E 2 AI image generator was asked to produce a collection of images of destination marketing organization CEOs, 95% of the results were individuals of various Asian ethnicities, with just a single person of color rounding out the requested portraits. In the case of this particular example, it could be that the data the AI model was trained on included a large number of images of CEOs from Asian destination marketing organizations, or possibly that the naming or tagging convention of the training data was skewed in some way. These kinds of data biases can produce misleading or incorrect outputs, and in more critical applications than image generation, this can have significant repercussions.

But there’s more to this story.

The potential for AI bias can extend beyond just the training data. Human biases, whether we are aware of them or not, can creep into the AI systems we create. For instance, back in 1988, a British medical school was found guilty of discrimination because the computer program it used to determine which applicants were invited for interviews was biased against women and those with non-European names. This program was designed to mimic human admissions decisions, and it matched them with an accuracy rate of 90 to 95%. The issue wasn’t that the algorithm was biased in itself, but that it was trained on biased human decision-making.

Moreover, biases can stem from flawed data sampling. As an example, MIT researchers found that facial analysis technologies exhibited higher error rates for minorities, particularly minority women, which might be due to unrepresentative training data. In the end…it’s not AI that is “deciding” to be biased or not; remember, it’s not sentient or self-aware.
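One practical way to spot this kind of gap is to compare error rates group by group rather than looking at a single overall number. The Python sketch below is a minimal illustration under stated assumptions: the `error_rate_by_group` helper is hypothetical, and the review log is invented for the example (it is not data from the MIT study or any real system).

```python
from collections import defaultdict


def error_rate_by_group(records):
    """records: iterable of (group, was_correct) pairs.

    Returns a dict mapping each group to its share of incorrect results.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, was_correct in records:
        totals[group] += 1
        if not was_correct:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Invented review log for illustration only
review_log = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = error_rate_by_group(review_log)
print(rates)
```

In this made-up log the overall error rate is 50%, which hides the fact that one group sees errors three times as often as the other. Breaking results out by group is what surfaces the skew.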

So, what can we do about this?

For individual DMOs, it might seem like there’s limited room to address AI shortcomings directly. Searching online for best practices doesn’t yield many actionable steps tailored for our industry. However, with a keen understanding and some practical measures, your organization can safeguard the use of AI tools. When employing AI platforms for research, data analysis, or other fact-reliant tasks, it’s imperative to meticulously review and verify the AI responses or creative output (as when using image generation tools). The more public the content or analysis you’re working on, the more you should look to audit the AI’s output. In contrast, for creative tasks like brainstorming or writing assistance, the emphasis on factual accuracy might be reduced, but a discerning eye remains essential.

Addressing AI bias requires a two-pronged approach. First, it’s about education: empower team members with knowledge about potential biases in AI results. Make them aware of how AI systems might inadvertently lean a certain way due to their training data. Second, maintain a rigorous review process. Before deploying any AI-generated content, scrutinize and rectify content that might inadvertently reflect biases. This ensures that the content aligns with the DMO’s values and objectives, fostering trust with the target audience.

Whew! That’s a lot to digest, and it may make using AI tools feel a little off-putting…but fear not! As we’ll explore in the upcoming laws, the payoff that AI platforms can have on our organizations is truly worth the extra care required to navigate the challenges we’ve covered here. It’s important to stay as current as you can on AI news, especially if you are a CEO, COO, or CMO leading teams that are employing these tools. Here are a few places I’ve found useful to visit on a regular basis:

- AI News

- Wired’s AI Section

- Forbes AI

- MIT Tech Review AI

- Harvard Business Review: (no official section, but a Google search of HBR AI will produce some truly terrific articles on the subjects covered above)

Next up, we’ll begin to explore why these new AI platforms are worth the effort, despite the challenges.

Other blogs in this series:

Law 1: AI is the Tool, Not the Craftsman

Law 2: Humble Beginnings and Law 3: Transparency

Law 5: The Law of Liberated Potential and Law 6: The Law of Collective Empowerment

Law 7: The Law of the Artful Inquiry

Law 8: The Law of Constructive Command

Law 9: The Law of Data Enlightenment

Law 10: The Law of Democratized Innovation

Law 11: The Law of Creative Exploration

Law 12: The Law of Ascendency

Note: This collection of “laws” on AI incorporates insights from my research and writing on the topic. To make it as memorable as I could, and to demonstrate one of the many powerful utilities these tools offer, I asked ChatGPT 4.0 to style my writing in the voice of Robert Greene, author of the best-selling book “The 48 Laws of Power.” I hope you will agree that each of the Laws is a bit more memorable with this distinct style being employed. It’s crucial that we embrace these new tools and transparently acknowledge how they improve our critical thinking and public sharing of ideas.